{

"Features":[

{

"Read real-time video data via webcam":"Get real-time video data for body tracking processing through a webcam on a desktop or laptop, or a camera on a mobile device"

},

{

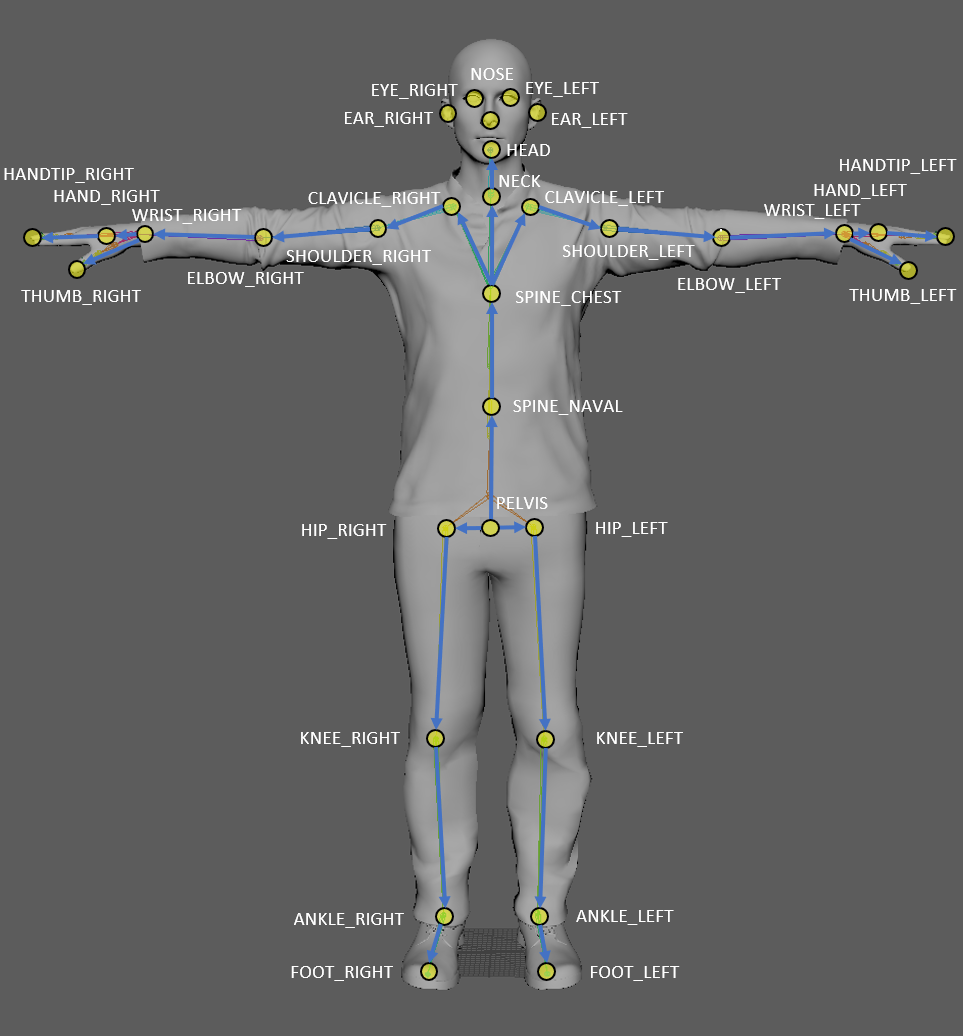

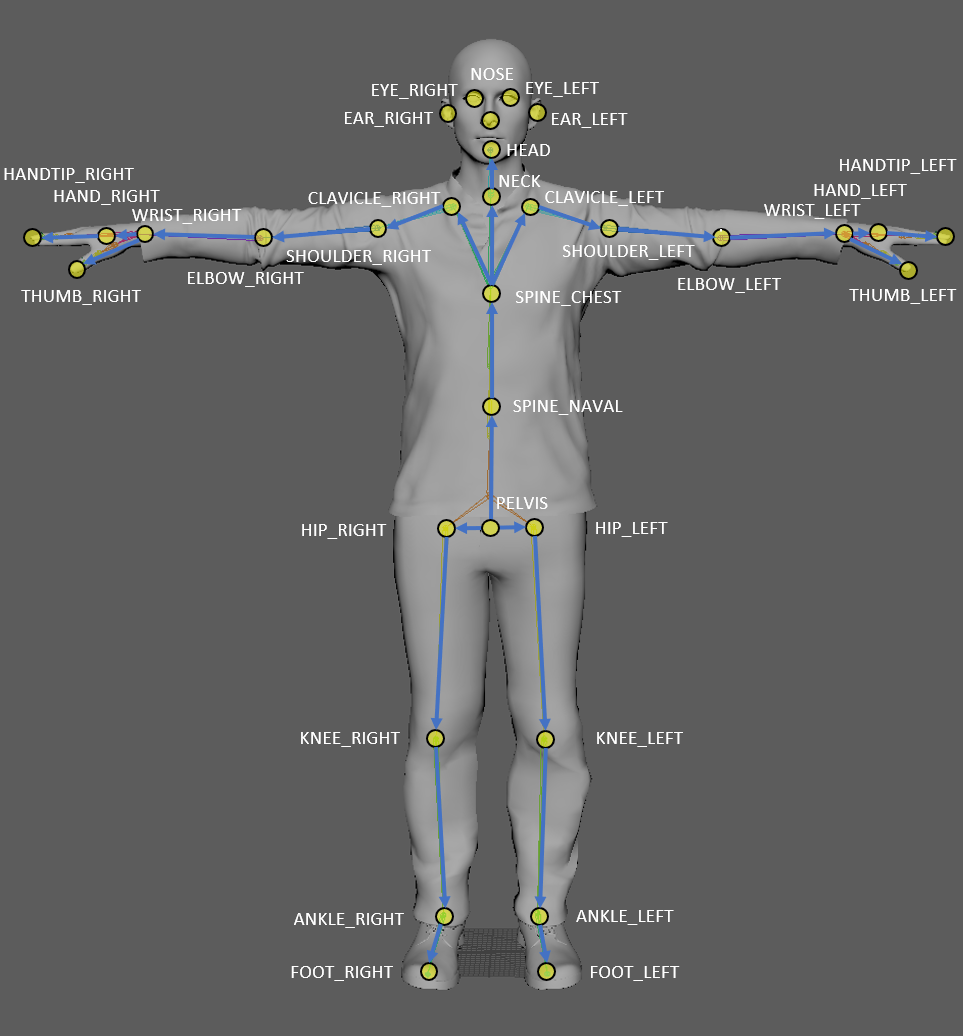

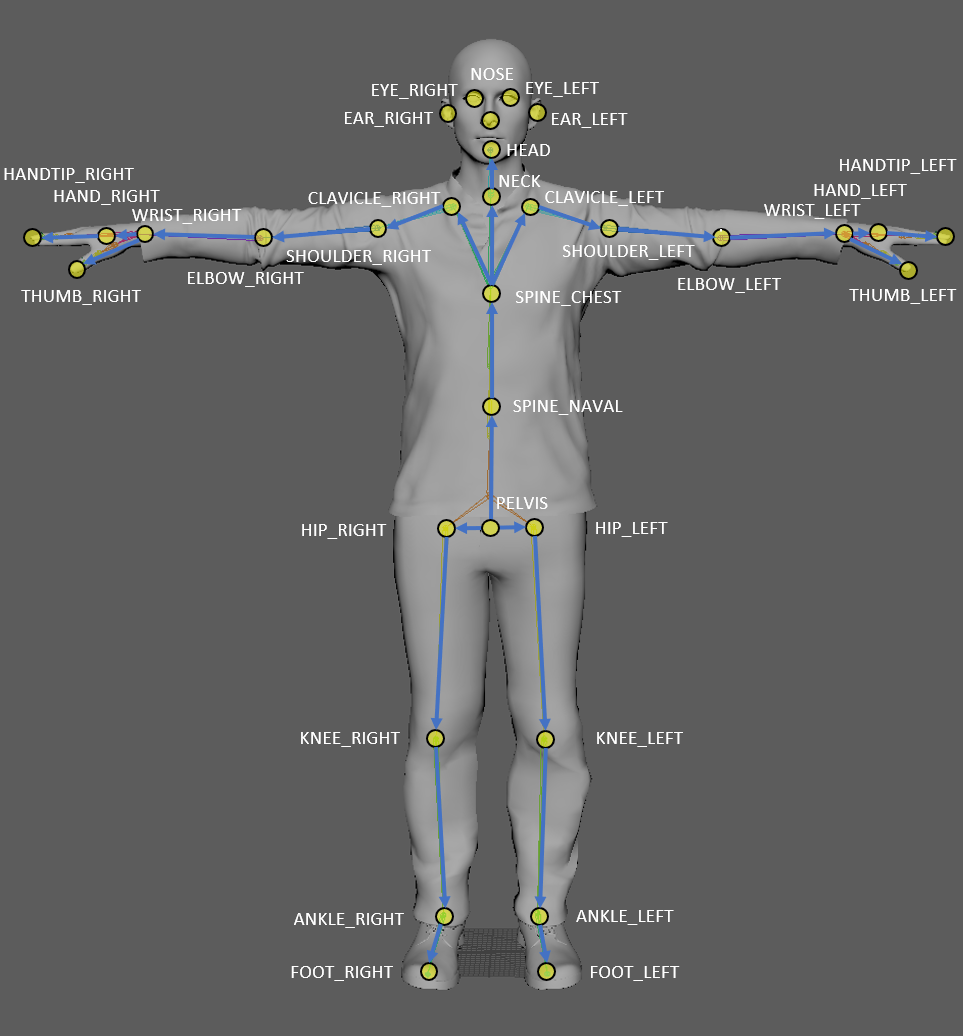

"Extract the body's joint locations in real time from image data":"Using the API of the given library, data such as the location of human body joints are tracked and extracted in real time from image data obtained from webcams"

}

]

}

The purpose of the Body Tracking project is to extract data such as the three-dimensional position and angle of each part of the human body from a video stream.
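As an illustration of what "angle" can mean here (a common technique, not necessarily the one this project uses), the angle at a middle joint such as the elbow can be computed from three joint positions: with u = a − b and v = c − b, the angle at b is acos(u·v / (|u||v|)). The sketch below assumes plain `{x, y, z}` objects as input; the function name `jointAngleDeg` is hypothetical.

```javascript
// Angle (in degrees) at joint b formed by the segments b->a and b->c.
// Points are plain {x, y, z} objects, e.g. joint positions in millimeters.
function jointAngleDeg(a, b, c) {
  const u = { x: a.x - b.x, y: a.y - b.y, z: a.z - b.z };
  const v = { x: c.x - b.x, y: c.y - b.y, z: c.z - b.z };
  const dot = u.x * v.x + u.y * v.y + u.z * v.z;
  const lenU = Math.hypot(u.x, u.y, u.z);
  const lenV = Math.hypot(v.x, v.y, v.z);
  if (lenU === 0 || lenV === 0) {
    return NaN; // the angle is undefined when two joints coincide
  }
  // Clamp so floating-point rounding cannot push the cosine outside acos's domain.
  const cos = Math.min(1, Math.max(-1, dot / (lenU * lenV)));
  return (Math.acos(cos) * 180) / Math.PI;
}

// Usage: shoulder above the elbow, wrist to the side, giving a right angle.
const shoulder = { x: 0, y: 300, z: 0 };
const elbow = { x: 0, y: 0, z: 0 };
const wrist = { x: 250, y: 0, z: 0 };
console.log(jointAngleDeg(shoulder, elbow, wrist).toFixed(1)); // → 90.0
```

Working from positions keeps the sketch independent of any SDK; if a library already reports per-joint orientations, those can be used directly instead.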

The project took two main approaches.

The first uses Microsoft's Azure Kinect DK. The second processes a video stream received from a webcam on a web page, using an open-source body tracking model.

Using the Azure Kinect DK is very simple: you only need the device provided by Microsoft and calls to its corresponding API.

The example images above show a program built with Windows Forms. Its core source file is as follows:

```csharp
// Note: the unsafe blocks below require AllowUnsafeBlocks to be enabled in the project.
using System;
using System.Buffers;
using System.Collections.Generic;
using System.Drawing;
using System.Text;
using System.Threading.Tasks;
using System.Windows.Forms;
using Microsoft.Azure.Kinect.BodyTracking;
using Microsoft.Azure.Kinect.Sensor;
using BitmapData = System.Drawing.Imaging.BitmapData;
using Image = Microsoft.Azure.Kinect.Sensor.Image;

namespace AzureKineticTest_1
{
    public partial class Form1 : Form
    {
        private Device kinectDevice;
        private DeviceConfiguration deviceConfiguration;
        // ...

        public Form1()
        {
            InitializeComponent();
            InitKinect();
        }

        // Release the device when the form closes. A finalizer (~Form1) is not a safe
        // place to dispose managed objects, so the cleanup is done here instead.
        protected override void OnFormClosed(FormClosedEventArgs e)
        {
            isActive = false;
            kinectDevice?.StopCameras();
            kinectDevice?.Dispose();
            tracker?.Dispose();
            base.OnFormClosed(e);
        }

        // Shows the color stream. Each loop calls GetCapture() on its own, so when several
        // loops are enabled they split the device's captures between them.
        private async Task CalculateColor()
        {
            while (isActive)
            {
                using (Capture capture = await Task.Run(() => kinectDevice.GetCapture()).ConfigureAwait(true))
                {
                    unsafe
                    {
                        Image colorImage = capture.Color;
                        using (MemoryHandle pin = colorImage.Memory.Pin())
                        using (var view = new Bitmap(colorImage.WidthPixels, colorImage.HeightPixels, colorImage.StrideBytes,
                            System.Drawing.Imaging.PixelFormat.Format32bppArgb, (IntPtr)pin.Pointer))
                        {
                            // This Bitmap constructor only wraps the pinned buffer, which is freed
                            // together with the capture, so copy the pixels into an owned Bitmap.
                            colorBitmap = new Bitmap(view);
                        }
                    }

                    System.Drawing.Image previous = pictureBox_Color.Image;
                    pictureBox_Color.Image = colorBitmap;
                    previous?.Dispose();
                    Update();
                }
            }
        }

        // Shows the depth stream as grayscale: 0-5000 mm maps to 0-255, larger values are drawn black.
        private async Task CalculateDepth()
        {
            while (isActive)
            {
                using (Capture capture = await Task.Run(() => kinectDevice.GetCapture()).ConfigureAwait(true))
                {
                    unsafe
                    {
                        Image depthImage = capture.Depth;
                        ushort[] depthArr = depthImage.GetPixels<ushort>().ToArray();

                        // Assumes depthBitmap (created in InitBitMap) has the same size as the depth image.
                        BitmapData bitmapData = depthBitmap.LockBits(
                            new Rectangle(0, 0, depthBitmap.Width, depthBitmap.Height),
                            System.Drawing.Imaging.ImageLockMode.WriteOnly,
                            System.Drawing.Imaging.PixelFormat.Format32bppArgb);
                        byte* pixels = (byte*)bitmapData.Scan0;

                        for (int index = 0; index < depthArr.Length; index++)
                        {
                            int depth = (int)(255 * depthArr[index] / 5000.0);
                            if (depth < 0 || depth > 255)
                            {
                                depth = 0;
                            }

                            // 4 bytes per pixel, in B, G, R, A order
                            int tempIndex = index * 4;
                            pixels[tempIndex++] = (byte)depth;
                            pixels[tempIndex++] = (byte)depth;
                            pixels[tempIndex++] = (byte)depth;
                            pixels[tempIndex] = 255;
                        }

                        depthBitmap.UnlockBits(bitmapData);
                        pictureBox_Depth.Image = depthBitmap;
                    }
                    Update();
                }
            }
        }

        // Runs the body tracker and lists every joint position (in millimeters) for each person.
        private async Task CalculateBodyTracking()
        {
            var calibration = kinectDevice.GetCalibration(deviceConfiguration.DepthMode, deviceConfiguration.ColorResolution);
            TrackerConfiguration trackerConfiguration = new TrackerConfiguration()
            {
                ProcessingMode = TrackerProcessingMode.Gpu,
                SensorOrientation = SensorOrientation.Default
            };
            tracker = Tracker.Create(calibration, trackerConfiguration);

            while (isActive)
            {
                using (Capture capture = await Task.Run(() => kinectDevice.GetCapture()))
                {
                    tracker.EnqueueCapture(capture);

                    // PopResult blocks until the frame has been processed, so keep it off the UI thread.
                    using (Frame frame = await Task.Run(() => tracker.PopResult()))
                    {
                        var text = new StringBuilder();
                        jointData.Clear();

                        for (uint bodyIndex = 0; bodyIndex < frame.NumberOfBodies; bodyIndex++)
                        {
                            Skeleton skeleton = frame.GetBodySkeleton(bodyIndex);
                            if (bodyIndex >= 1)
                            {
                                text.AppendLine().AppendLine("------------------------------------------------------");
                            }
                            text.AppendLine("Person Index : " + bodyIndex);
                            jointData.Add(new Dictionary<JointId, Joint>());

                            for (int jointIndex = 0; jointIndex < (int)JointId.Count; jointIndex++)
                            {
                                Joint joint = skeleton.GetJoint((JointId)jointIndex);
                                text.AppendLine("Joint Index : " + jointIndex + " - X : " + joint.Position.X + " / Y : " + joint.Position.Y + " / Z : " + joint.Position.Z);
                                jointData[(int)bodyIndex].Add((JointId)jointIndex, joint);
                            }
                        }

                        // Update the text box once per frame instead of once per joint.
                        textBox_BodyTracking.Text = text.ToString();
                    }
                }
            }
        }

        // ...

        private void InitKinect()
        {
            try
            {
                kinectDevice = Device.Open(0);
                deviceConfiguration = new DeviceConfiguration()
                {
                    ColorFormat = ImageFormat.ColorBGRA32,
                    ColorResolution = ColorResolution.R720p,
                    DepthMode = DepthMode.NFOV_2x2Binned,
                    CameraFPS = FPS.FPS15,
                    SynchronizedImagesOnly = true
                };
            }
            catch (AzureKinectException ex)
            {
                textBox_Error.Text += "1> An exception occurred while opening the Kinect device" + Environment.NewLine + "1> Please check your device connection" + Environment.NewLine;
                textBox_Error.Text += ex.ToString() + Environment.NewLine;
            }
        }

        private void button_CameraCapture_Click(object sender, EventArgs e)
        {
            if (!isActive)
            {
                try
                {
                    kinectDevice.StartCameras(deviceConfiguration);
                }
                catch (AzureKinectException ex)
                {
                    textBox_Error.Text += "1> An exception occurred while starting the Kinect device" + Environment.NewLine + "1> Please check your device connection" + Environment.NewLine;
                    textBox_Error.Text += ex.ToString() + Environment.NewLine;
                    return; // the cameras did not start, so do not begin capturing
                }

                isActive = true;
                InitBitMap();

                bool flag = true;
                if (checkBox_Color.Checked)
                {
                    flag = false;
                    _ = CalculateColor(); // fire-and-forget: the loop runs until isActive becomes false
                }
                if (checkBox_Depth.Checked)
                {
                    flag = false;
                    _ = CalculateDepth();
                }
                if (checkBox_BodyTracking.Checked)
                {
                    flag = false;
                    if (jointData == null)
                    {
                        jointData = new List<Dictionary<JointId, Joint>>();
                    }
                    _ = CalculateBodyTracking();
                }

                if (flag)
                {
                    textBox_Error.Text += "=== No check box selected ===" + Environment.NewLine;
                    textBox_Error.Text += "[" + DateTime.Now.ToString("HH-mm-ss") + "] > Capture fail time" + Environment.NewLine;
                }
                else
                {
                    textBox_Error.Text += "=== Capture start ===" + Environment.NewLine;
                    textBox_Error.Text += "[" + DateTime.Now.ToString("HH-mm-ss") + "] > Capture start time" + Environment.NewLine;
                }
            }
            else
            {
                isActive = false;
                kinectDevice.StopCameras();
                tracker?.Dispose();

                // Detach the bitmaps before disposing them so the picture boxes do not repaint freed images.
                pictureBox_Color.Image = null;
                pictureBox_Depth.Image = null;
                colorBitmap?.Dispose();
                depthBitmap?.Dispose();

                textBox_Error.Text += "=== Capture end ===" + Environment.NewLine;
                textBox_Error.Text += "[" + DateTime.Now.ToString("HH-mm-ss") + "] > Capture end time" + Environment.NewLine + Environment.NewLine;
            }
        }

        // ...
    }
}
```
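The grayscale conversion inside `CalculateDepth` is easy to check in isolation. The sketch below restates that arithmetic in JavaScript so it runs on its own; the function names `depthToGray` and `depthFrameToBgra` are hypothetical, and the 5000 mm cut-off is the constant chosen in the C# code, not an SDK limit. Each depth value d (in millimeters) becomes ⌊255·d/5000⌋, values that overflow 255 are drawn black, and every pixel is written as four bytes in B, G, R, A order.

```javascript
// Same mapping as CalculateDepth: 0..maxDepthMm -> 0..255, out-of-range -> 0 (black).
function depthToGray(depthMm, maxDepthMm = 5000) {
  const value = Math.trunc((255 * depthMm) / maxDepthMm); // C#'s (int) cast also truncates
  return value < 0 || value > 255 ? 0 : value;
}

// Converts a depth frame (one uint16 per pixel) into a BGRA byte buffer.
function depthFrameToBgra(depthPixels, maxDepthMm = 5000) {
  const out = new Uint8Array(depthPixels.length * 4);
  for (let i = 0; i < depthPixels.length; i++) {
    const gray = depthToGray(depthPixels[i], maxDepthMm);
    out[i * 4] = gray;     // B
    out[i * 4 + 1] = gray; // G
    out[i * 4 + 2] = gray; // R
    out[i * 4 + 3] = 255;  // A (opaque)
  }
  return out;
}

// Usage: four sample depths (0 mm, 2.5 m, 5 m, 6 m).
const frame = Uint16Array.from([0, 2500, 5000, 6000]);
console.log(Array.from(depthFrameToBgra(frame)).join(','));
// → 0,0,0,255,127,127,127,255,255,255,255,255,0,0,0,255
```

Note that anything farther than the cut-off wraps to black rather than saturating at white, which matches the C# loop but makes distant objects indistinguishable from missing depth (0).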

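The webcam method was implemented by combining code found through web searches, and consists of a simple HTML page and JavaScript. The page, titled "Auto Video Stream to Still Image", shows the webcam feed in a video element (`myVideo`), draws snapshots onto a canvas (`myCanvas`), and has a "Take snapshot every ___ milliseconds" field (`myInterval`) that sets the snapshot interval. The script is as follows: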

```javascript
// processing.js
// The page must also load TensorFlow.js and PoseNet, which provide the global `posenet`.

var myVideoStream = document.getElementById('myVideo'); // make it a global variable
var myStoredInterval = 0;
var netPromise = null; // the PoseNet model, loaded once on first use

function getVideo() {
  // navigator.mediaDevices.getUserMedia replaces the deprecated, vendor-prefixed navigator.getUserMedia
  navigator.mediaDevices.getUserMedia({ video: true, audio: false })
    .then(function (stream) {
      myVideoStream.srcObject = stream;
      myVideoStream.play();
    })
    .catch(function (error) {
      alert('webcam not working');
    });
}

function takeSnapshot() {
  var myCanvasElement = document.getElementById('myCanvas');
  var myCTX = myCanvasElement.getContext('2d');
  myCTX.drawImage(myVideoStream, 0, 0, myCanvasElement.width, myCanvasElement.height);
  StartTracking();
}

function takeAuto() {
  takeSnapshot(); // get snapshot right away then wait and repeat
  clearInterval(myStoredInterval);
  myStoredInterval = setInterval(function () {
    takeSnapshot();
  }, Number(document.getElementById('myInterval').value));
}

function StartTracking() {
  var imageScaleFactor = 0.5;
  var outputStride = 16;
  var flipHorizontal = false;
  var imageElement = document.getElementById('myVideo');

  // Load the model only once instead of on every snapshot.
  if (netPromise === null) {
    netPromise = posenet.load();
  }

  netPromise.then(function (net) {
    return net.estimateSinglePose(imageElement, imageScaleFactor, flipHorizontal, outputStride);
  }).then(function (pose) {
    console.log(pose); // pose.keypoints holds each body part's position and confidence score
  });
}
```
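The script above only logs the pose. To actually use the joint locations, the keypoints can be filtered by confidence. This sketch assumes the pose shape returned by the PoseNet version used here (a `keypoints` array whose entries have `part`, `score`, and `position.x`/`position.y` in image pixels); the function name `confidentKeypoints` and the 0.5 threshold are hypothetical choices.

```javascript
// Collects confident keypoints from a PoseNet pose into { part: { x, y, score } }.
// Assumed pose shape: { score, keypoints: [{ part, score, position: { x, y } }] }.
function confidentKeypoints(pose, minScore = 0.5) {
  const joints = {};
  for (const keypoint of pose.keypoints) {
    if (keypoint.score >= minScore) {
      joints[keypoint.part] = {
        x: keypoint.position.x,
        y: keypoint.position.y,
        score: keypoint.score,
      };
    }
  }
  return joints;
}

// Usage with a hand-written example pose (not real model output).
const examplePose = {
  score: 0.8,
  keypoints: [
    { part: 'nose', score: 0.95, position: { x: 320, y: 110 } },
    { part: 'leftWrist', score: 0.2, position: { x: 410, y: 380 } },
  ],
};
console.log(JSON.stringify(confidentKeypoints(examplePose)));
// → {"nose":{"x":320,"y":110,"score":0.95}}
```

In `StartTracking`, such a function could be called inside the final `.then(function (pose) { ... })` in place of `console.log(pose)`. Dropping low-score keypoints matters for webcam input, where occluded or poorly lit joints still receive a position.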

The files required for the ML model are as follows:

Related articles and demonstrations are as follows:

After testing both methods, the pros and cons of each became clear.

First, the Azure Kinect DK makes development easier. Its disadvantages are that the device is not cheap and that the available platforms are somewhat limited, because the API used here is C#-based.

Next, the combination of an ML model and a webcam: the model tested here is written in JavaScript, which points to wide applicability both on the web and in native apps, and using an ordinary webcam means it can be adopted without spending money on additional equipment. However, because the model is open source, it is unclear whether it will continue to be updated, and the quality of the body tracking results can depend on various factors such as the webcam's resolution.

{
  "References":[
    {
      "[1] Azure Kinect DK documentation":"https://learn.microsoft.com/en-us/azure/kinect-dk/"
    },
    {
      "[2] Real-time extraction of joint location from image data":"https://codepen.io/rocksetta/pen/BPbaxQ"
    },
    {
      "[3] pose_estimation":"https://github.com/llSourcell/pose_estimation"
    }
  ]
}